Creating and using containers with Docker is cool. Docker has 7 different ways to set up networks within it. In this lab, I’m going to show you 2 of them to experiment with: the Default network and the User Defined network. The rest will come with more time in the saddle. Have a look and I hope you enjoy it!

Requirements: VM

New Ubuntu Install

Procedures

Update Ubuntu with the following command:

sudo apt-get update && sudo apt upgrade

Network 1 – Default Network

Have a look at what’s going on under the hood on the network:

ip address show

With the Ubuntu VM running, go into VirtualBox and click on “Settings”. Then go to “Network” and, in the “Attached to:” dropdown menu, select “Bridged Adapter”. Click “OK”.

This connects your VM directly to your home network. Run the command once more:

ip address show

You will see that the IP address for enp0s3 has changed. Now we can install Docker from the terminal in the Ubuntu VM:

sudo apt install docker.io -y

With Docker installed, run ip address show once more and you’ll see a new docker0 interface in the output. Next, list the Docker networks:

sudo docker network ls

This lists all our current Docker networks. You should see each network’s name and driver; among them is one named ‘bridge’, which Docker created for you automatically.

Now we’re going to add some containers in Docker, most of them from the BusyBox Docker image. What does that mean? BusyBox is an open source project that predates Docker; it combines tiny versions of many common UNIX utilities into a single small executable, which makes it handy for building space-efficient images. It will allow us to create containers, open a shell and achieve some functionality.

Enter the command below:

sudo docker run -itd --rm --name x busybox

Here -itd makes the container interactive, gives it a terminal, and runs it detached, which means it will be running in the background. The --rm flag makes the container clean up after itself: Docker removes it automatically once it stops. We give it a name with --name and then specify the busybox image to run.
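As an aside, the short flags in that command can also be spelled out in full. Here is a minimal sketch that wraps the same command in a small shell function using the long option names. The function name run_detached is my own invention for illustration and is not part of Docker.

```shell
# run_detached NAME IMAGE
# Same as: sudo docker run -itd --rm --name NAME IMAGE
# (run_detached is a hypothetical helper name, used only for illustration.)
run_detached() {
    # --interactive (-i): keep STDIN open
    # --tty         (-t): allocate a pseudo-terminal
    # --detach      (-d): run the container in the background
    # --rm              : remove the container automatically when it stops
    sudo docker run --interactive --tty --detach --rm --name "$1" "$2"
}

# Usage: run_detached x busybox
```

The rest of the lab sticks with the short form, since it is quicker to type and edit with the UP arrow.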

Hit the UP arrow key and create another container with a different name:

sudo docker run -itd --rm --name y busybox

Hit the UP arrow key again and create a third container with a different name, this time from the nginx image:

sudo docker run -itd --rm --name z nginx

Let’s take a quick pause to ensure they’re all up:

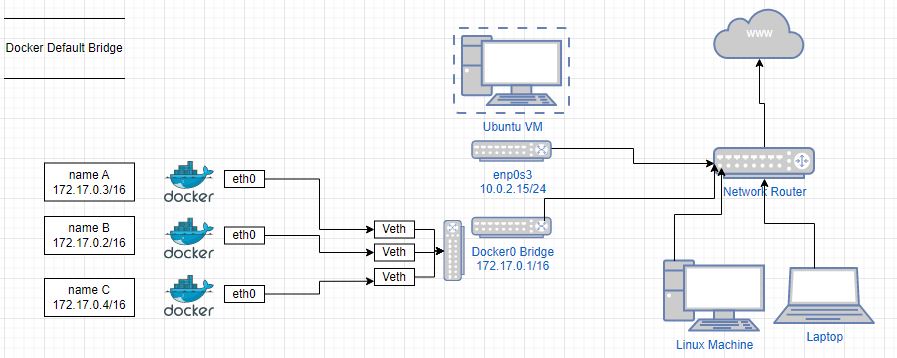

sudo docker ps

From a networking perspective, Docker is doing a lot of the heavy lifting for us. This is very cool. Because we didn’t specify a network, the containers were deployed into the default network, and Docker set up three virtual ethernet interfaces and connected them to the docker0 bridge.

This bridge acts like a switch, and there’s a virtual ethernet interface for each individual container. When we input the following command, the output shows the actual interface names and their connection to docker0:

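bridge link

While this bridge was undertaking its work, Docker was also handing out IP addresses, much like a DHCP server would! Wow. (Strictly speaking, Docker assigns them through its own built-in address management rather than a real DHCP service.) To see this on our network bridge, input the following command into your terminal: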

sudo docker inspect bridge

What is even better is that each of these containers has its own IP address inside the docker0 network. Docker also takes care of DNS: it takes a copy of the /etc/resolv.conf file from the host and puts it into each of the containers so they’re all using DNS. As the docker0 bridge also acts like a switch, the containers are able to communicate with each other!
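The inspect output is a fairly long block of JSON, so here is a small sketch that pulls out just the container names and their addresses using the --format option with a Go template. The function name list_container_ips is hypothetical, something I made up for this example.

```shell
# list_container_ips NETWORK
# Prints one "name address" line per container attached to NETWORK.
# (list_container_ips is a hypothetical helper name.)
list_container_ips() {
    sudo docker network inspect \
        --format '{{range .Containers}}{{println .Name .IPv4Address}}{{end}}' \
        "$1"
}

# Usage: list_container_ips bridge
```

It takes the network name as an argument, so the same function should work for any other Docker network too.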

So this is where some of the fun starts. Let’s jump into one of the containers to verify it can ping the other ones by entering the following command:

sudo docker exec -it namex sh

(where namex is the name of your container)

You’ll know you’re in the container when you see / # on your terminal. Go ahead and ping your other containers, using the IP addresses from the inspect output:

ping 172.17.0.4

Control C will stop the ping and display the results, and you should see 0% packet loss.

I did a quick whoami to verify that I am indeed root. Cool.

Now let’s verify we can reach the internet by pinging either your own website or Google’s public DNS server:

ping 8.8.8.8

Again, 0% packet loss. If we then input the following command:

ip route

We can see something pretty interesting: your container’s default gateway is the docker0 bridge, reached through eth0, and that is how it gets outside its own little world into the big bad WWW. How does it do that?? you may be asking. With NAT masquerade, which is not a separate utility but a feature of the Linux kernel’s packet filtering that Docker configures on the host.

I don’t want to get too far off the path here, but NAT masquerade allows your private network to hide behind and be represented by something else. NAT routes traffic from the container to your system, which essentially makes the system the gateway for the container. The masquerade translates multiple IP addresses into another single IP address. Again, without going too far off the path, Docker IS busy and sets up eth0 in each container automatically for us.
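If you want to see the masquerade rule for yourself, here is a rough sketch to run on the Ubuntu host (not inside a container). It assumes Docker is managing its NAT rules through iptables, which I believe is the default for this install but is worth verifying on your own setup. The function name show_masquerade_rules is hypothetical.

```shell
# show_masquerade_rules
# Lists the NAT POSTROUTING rules on the host and keeps only the MASQUERADE entries.
# Assumption: Docker is using the iptables backend on this host.
# (show_masquerade_rules is a hypothetical helper name.)
show_masquerade_rules() {
    sudo iptables -t nat -S POSTROUTING | grep MASQUERADE
}

# Usage: show_masquerade_rules
```

Any rule that mentions the docker0 subnet is the one doing the translation for our containers.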

Back onto our path, because we forgot about nginx, which by default serves a web page on port 80. SOoooo can my home computer reach that website?? Back on your host computer, pull up your favourite browser and put the IP address assigned to enp0s3 back at the beginning into the URL bar. There should be no joy here.

This needs to be fixed manually: you have to expose the port yourself by publishing it when the container starts, which means we have to redeploy the nginx container. To do that, let’s stop the nginx container with the following command:

sudo docker stop z

Redeploy our nginx container, but with another instruction in the command line to expose port 80. Input as follows:

sudo docker run -itd --rm -p 80:80 --name z nginx

And then a quick check to see what changed. Input the following command:

sudo docker ps

You’ll now see the exposed port in the PORTS column. Refresh the page in your host computer’s browser and you should see the message “Welcome to nginx”, telling us the nginx webserver has been successfully installed and is working. Awesome. Below is a simple diagram of the Default bridge network created in Docker.

Network 2 – User Defined Network

This is all okay, though what we really want here is some isolation between your containers and your network, and isolation from the host too, which is great for our lab!

So on to the next network type: user defined. We’re going to set up our own network instead of using the default network. To do this we are going to create a ‘user-defined’ bridge.

Before doing this, you just need to think of a name for your network. After that, it is as simple as the following command:

sudo docker network create 'nameofyourchoosing'

Do a quick check:

ip address show

Another command:

sudo docker network ls

This reveals the name you chose and the network type.

From here, because we’re no longer in default world – we get to take the bull by the horns and do something!

Let’s add some containers to our new network with the following command:

sudo docker run -itd --rm --network 'newnetworkname' --name 'ofyourchoice' busybox

Add another busybox in there so no one is alone. Hit the up arrow and change the container name:

sudo docker run -itd --rm --network 'newnetworkname' --name 'ofyourchoice' busybox

Let’s have a look at our progress:

ip address show

And use the “bridge link” command to see those new interfaces tied into the new virtual bridge we created.

Then we can inspect that bridge with the following command to view what IP addresses our new containers were assigned (write these all down):

sudo docker inspect newnetworkname

No doubt you might be wondering why we’re doing all this, right? I’m personally just trying to learn and to show what I’ve been learning along my journey. But the real point of all THIS is ISOLATION. Anyone in IT, networking, cybersecurity, or system administration knows how important isolation is.

Let’s just demonstrate this real quick: drop back into one of the default network containers and ping one of our user-defined network containers by entering the shell with the following command:

sudo docker exec -it namex sh

Then ping a container across the hall in the other network, and you should be getting 100% packet loss.
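The same check can be run from the host without opening a shell in the container. This is a minimal sketch, assuming the container you name has a ping command available (the BusyBox ones do). The function name can_ping is hypothetical, and the names and addresses in the usage line are placeholders to swap for your own.

```shell
# can_ping CONTAINER TARGET
# Sends three pings from inside CONTAINER to TARGET (an IP address or a name)
# and reports whether the ping command succeeded.
# (can_ping is a hypothetical helper name.)
can_ping() {
    if sudo docker exec "$1" ping -c 3 -W 2 "$2" >/dev/null 2>&1; then
        echo "$1 -> $2: reachable"
    else
        echo "$1 -> $2: unreachable"
    fi
}

# Usage: can_ping x 172.17.0.3
```

Pointing it from a default network container at an address in the user-defined network should report unreachable, which is the isolation we were after.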

User-defined bridges are a better choice than the default bridge, especially if you’re going to be using Docker in production. Another benefit of creating user defined networks is that you can ping using names instead of IP addresses. This is helpful, especially in a bigger network: as you deploy new workloads, the IP addresses assigned to containers might change, but your user defined names won’t, so they’re easier to find and manage.

To demonstrate this point, ‘exit’ out of your previous shell if it was in the default network, and drop into another shell in your user defined network with the following command:

sudo docker exec -it namea sh

Then input

ping nameb

And here you’ll see the name, along with the IP address being identified. Cool.
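If you are curious where the name lookup is coming from, one way to peek is to print the DNS configuration inside the container. My understanding is that containers on a user-defined network are pointed at Docker’s own embedded DNS server (typically shown as nameserver 127.0.0.11), but treat that as something to confirm on your setup. The function name show_resolver is hypothetical.

```shell
# show_resolver CONTAINER
# Prints the DNS configuration that CONTAINER is actually using.
# (show_resolver is a hypothetical helper name.)
show_resolver() {
    sudo docker exec "$1" cat /etc/resolv.conf
}

# Usage: show_resolver namea
```

Running it against one container from each network makes for an easy side by side comparison.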